Although a computer is a machine, it can perform many of the same mental tasks as a human brain. Memory is one of the brain's most important functions: it allows us to recall experiences and facts from the past. Like humans, computers have memory, which allows them to retain information for later recall.

Memory is the heart of logic. Whether human or machine, we can only work on data if we have a place to store it. For this reason, memory has always been one of the most important parts of computer design. Most people think of RAM when they hear “memory,” but that wasn’t how things started.

This article will provide a quick overview of the development of random-access memory (RAM) and an explanation of the most common forms of RAM in use today, such as DDR3 DRAM and others. It also discusses how current RAM technologies stack up against potential successors like Z-RAM and TT-RAM.

What is RAM?

Random-access memory, or RAM (pronounced "ramm"), is a physical component that stores data temporarily and serves as the “working memory” of a device. It allows for fast reading and writing, so your computer keeps the information it needs right now in RAM rather than reading it directly from the hard drive each time. Increasing the amount of RAM lets a computer keep more data at hand simultaneously, which typically improves overall system performance.

RAM is like a desk, while a hard drive is like a filing cabinet. A desk is a place to keep things you use frequently and need to reach quickly, such as papers, pens, and notebooks.

Similarly, RAM holds all the information you’re currently using on your computer, smartphone, tablet, and so on. Like the desk in the comparison, this type of memory allows for much quicker read/write times than a hard drive. Because of mechanical constraints such as rotation speed, hard drives are typically far slower than RAM.

A Quick Look into the History of RAM

Early computers had an entirely different idea of memory than modern computers. Relays, mechanical counters, or delay lines handled early computers’ primary memory functions. Most people who have studied computer science know that vacuum tubes, like the Cathode Ray Tube displays, were used in these early computer systems. Then came the transistor era, which Bell Labs brought about.

It all began with the simple latch, a transistor circuit that can store one bit of data. The transistor became the fundamental building block of modern memory. Flip-flops, a type of latch that followed, can be grouped to create the registers used in many static memory cells today. Another approach paired a transistor with a capacitor, enabling smaller and denser dynamic memory.
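To make the idea of a one-bit latch concrete, here is a minimal sketch in Python. It assumes a set-reset (SR) latch built from two cross-coupled NOR gates, which is one common textbook arrangement and not necessarily the circuit the earliest designs used. The names `nor` and `SRLatch` are invented for this illustration.

```python
def nor(a: int, b: int) -> int:
    """Logical NOR of two bits."""
    return 0 if (a or b) else 1


class SRLatch:
    """One-bit storage cell modeled as two cross-coupled NOR gates (illustrative)."""

    def __init__(self) -> None:
        self.q = 0      # stored bit
        self.q_bar = 1  # complement of the stored bit

    def step(self, set_: int, reset: int) -> int:
        """Apply the inputs and let the feedback loop settle; return the stored bit."""
        for _ in range(4):  # a few passes are enough for the loop to stabilize
            q = nor(reset, self.q_bar)
            q_bar = nor(set_, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


latch = SRLatch()
latch.step(set_=1, reset=0)         # store a 1
print(latch.step(set_=0, reset=0))  # inputs released: the latch still holds 1
latch.step(set_=0, reset=1)         # store a 0
print(latch.step(set_=0, reset=0))  # inputs released: the latch still holds 0
```

The point of the sketch is the feedback: once the inputs are released, each gate's output keeps the other gate in place, so the bit persists for as long as the circuit has power.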

RAM has undergone numerous revisions that have increased its power and streamlined its structure, resulting in the tiny yet sophisticated technology we are familiar with today. So, let’s trace the history of RAM, from its inception to the most recent, cutting-edge DDR5.

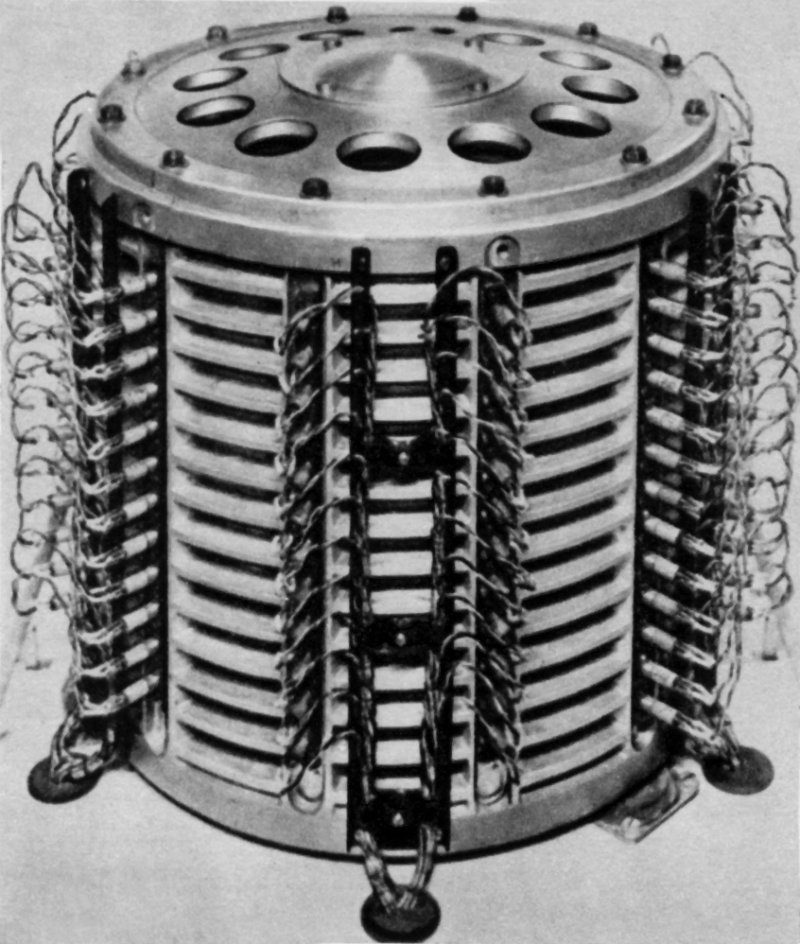

1. Drum Memory

Gustav Tauschek created drum memory, the first device that resembled RAM, in 1932. Drum memory behaves more like a hard disk drive (HDD) than like modern RAM. However, back then there was no need to differentiate between primary working memory and secondary memory, so we may still classify drum memory as the first version of RAM.

The exterior of the drum is coated with a ferromagnetic substance that serves as the store for binary data. Read-write heads positioned above this surface can write or retrieve data. To store binary data, a head generates an electromagnetic pulse that changes the orientation of the magnetic particles on the surface. To read, the heads simply detect the orientation of the magnetic particles as the surface passes beneath them.

2. Vacuum Tube Memory

The Williams Tube, the first real grandfather of RAM, was developed by Freddie Williams and Tom Kilburn in 1946 and 1947. It uses cathode ray tube technology, the same as the big, unwieldy first-generation TVs. To write the grid patterns that hold the memory contents, an electron beam is emitted, deflected by positively charged coils, and directed onto the phosphor surface.

These grid patterns represent the binary data a computer can read. Because the patterns fade over time and reading a spot disturbs what is stored there, the Williams Tube is volatile, and its contents must be refreshed continually. The electron beam deflection method, which is extremely susceptible to nearby electrical fields, is the primary weakness of the Williams Tube. Any charge imbalance near the cathode ray tube can seriously affect write operations. The Manchester Baby was the first computer to use this kind of memory, which made it possible for the machine to run programs in June 1948.

3. Magnetic Core Memory

From the 1950s through the 1970s, magnetic core memory was regarded as the industry-standard random-access memory for computers, largely replacing drum memory. The hardware consists of a grid of small magnetic rings, or cores, that store data by exploiting a phenomenon known as magnetic hysteresis. Simply put, each ring stores one bit as the direction of its magnetization. By applying an electrical current to the wires that run through the rings, we can flip that direction. When reading, a dedicated sense wire detects each core’s magnetization state and so retrieves the corresponding bit.
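The write and sense behavior described above can be sketched as a toy model in Python. This is a simplified illustration, not a circuit simulation: the class `CorePlane` and its methods are invented names, and the model adds one piece of background the article does not spell out, namely that reading a core is destructive (the core is driven to the 0 state and the sense wire sees a pulse only if the core flips), so a 1 must be written back after it is read.

```python
class CorePlane:
    """Toy model of a magnetic core plane (hypothetical, for illustration only).

    Each core holds a magnetization direction: +1 represents a 1 bit, -1 a 0 bit.
    """

    def __init__(self, rows: int, cols: int) -> None:
        self.cores = [[-1] * cols for _ in range(rows)]

    def write(self, row: int, col: int, bit: int) -> None:
        """Drive current through the selected wires to set the core's direction."""
        self.cores[row][col] = 1 if bit else -1

    def read(self, row: int, col: int) -> int:
        """Sense the core by forcing it to 0 and watching for a flip."""
        flipped = self.cores[row][col] == 1  # a flip induces a pulse on the sense wire
        self.cores[row][col] = -1            # the read itself erases the core
        bit = 1 if flipped else 0
        if bit:
            self.write(row, col, 1)          # write the 1 back so the data survives
        return bit


plane = CorePlane(rows=4, cols=4)
plane.write(2, 3, 1)
print(plane.read(2, 3))  # the stored 1 is sensed and then restored
print(plane.read(2, 3))  # still 1 on a second read, thanks to the write-back
print(plane.read(0, 0))  # an untouched core reads as 0
```

Because the state lives in the magnetization of the rings rather than in a charge, a real core plane keeps its contents when power is removed, which the toy model does not attempt to show.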

4. Static Random Access Memory

After the development of the metal-oxide-semiconductor, or MOS, memory in 1964, things started to change. Compared to magnetic core memory, MOS Memory performed much better while using less power and costing less. What’s more, MOS memory could be reduced to tiny chips that are simple to fit inside a computer. It swiftly dominated the market and rendered core memory obsolete.

In 1963, Robert H. Norman created the first static random-access memory (SRAM), a bipolar design that came after the invention of the MOS transistor. John Schmidt created the MOS SRAM a year later. In 1965, IBM used SRAM in a commercial product for the first time when it debuted the SP95 memory chip.

5. Dynamic Random Access Memory

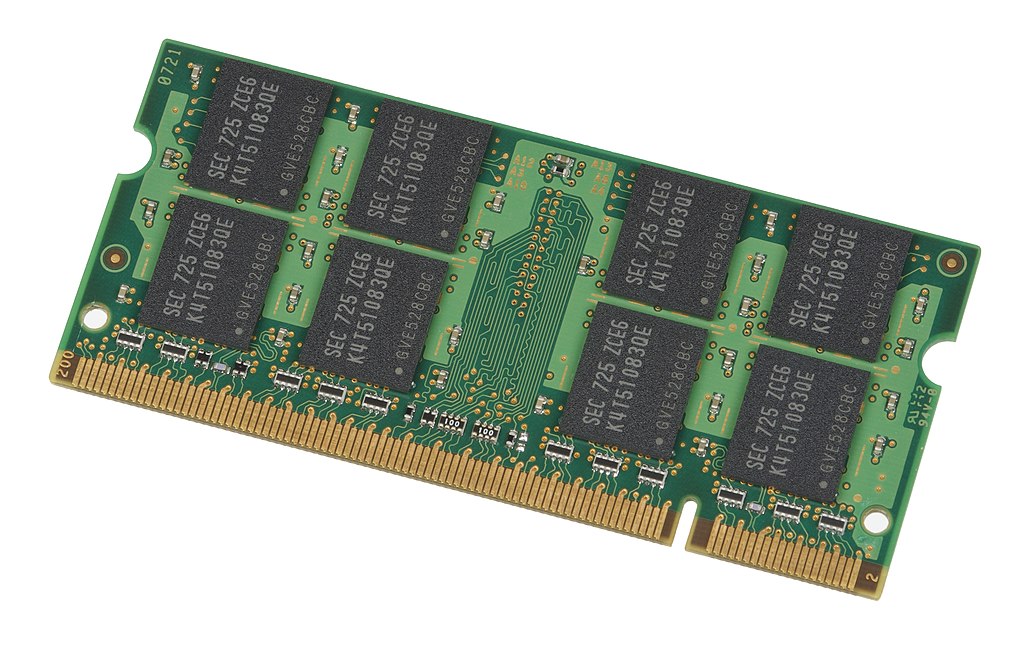

What we usually mean by RAM today is DRAM. It serves as most of a computer’s main memory and offers the room that program code needs to run effectively. Compared with SRAMs, DRAMs have a higher storage density and are considerably smaller. Because of their comparatively simple cell layout, DRAMs are also significantly less expensive per bit than SRAMs.

The concept of DRAM first came to Robert H. Dennard in 1966, while he was working on SRAM technology. Dennard concluded that a capacitor could be built using the same MOS technology as the transistors. When Honeywell introduced the new memory technology in 1969, DRAMs could hold 1 kbit (about 1,000 bits) of data. Unfortunately, the market showed little interest in the resulting 1102 DRAM, which had numerous flaws. Eventually Intel, which had previously collaborated with Honeywell, redesigned the DRAM and produced an entirely new chip, the 1103, that was marketable and available to the general public.

DRAM technology advanced again in 1973, when Mostek debuted the MK4096, a 4 kbit (4,096-bit) device. The new DRAM, designed by Robert Proebsting, achieved a substantial advance by employing a successful multiplexed addressing technique. The MK4116, a second generation of Mostek’s new DRAM chip with 16 kbit of storage, followed. At the height of its popularity, the MK4116 held three-quarters of the global DRAM market.

The era of conventional DRAM closed with Samsung’s 4 Gbit chip, its last commercially available part of that kind, in February 2001. The RAM saga did not end there, however. Instead, 2001 signaled the shift to synchronous dynamic random-access memory, or SDRAM, a more sophisticated version of RAM.
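Multiplexed addressing means the chip receives an address in two halves over the same pins, first the row and then the column, instead of needing one pin per address bit. The following Python sketch shows the arithmetic for a 4,096-bit device. It assumes, purely for illustration, a square array of 64 rows by 64 columns; the constants and the function names `split_address` and `join_address` are not taken from any datasheet.

```python
ROW_BITS = 6  # assumed: 64 rows
COL_BITS = 6  # assumed: 64 columns, giving 64 * 64 = 4,096 cells


def split_address(addr: int) -> tuple[int, int]:
    """Split a flat cell address into the (row, column) halves sent in turn."""
    if not 0 <= addr < (1 << (ROW_BITS + COL_BITS)):
        raise ValueError("address out of range for a 4,096-bit array")
    row = addr >> COL_BITS               # upper bits, presented first
    col = addr & ((1 << COL_BITS) - 1)   # lower bits, presented second on the same pins
    return row, col


def join_address(row: int, col: int) -> int:
    """Recombine the two halves into the flat address."""
    return (row << COL_BITS) | col


row, col = split_address(130)
print(row, col)                # 2 2
print(join_address(row, col))  # 130
print(max(ROW_BITS, COL_BITS), "address pins instead of", ROW_BITS + COL_BITS)
```

Under these assumptions the chip needs 6 address pins rather than 12, at the cost of taking two steps to deliver each address. Fewer pins allow a smaller, cheaper package.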

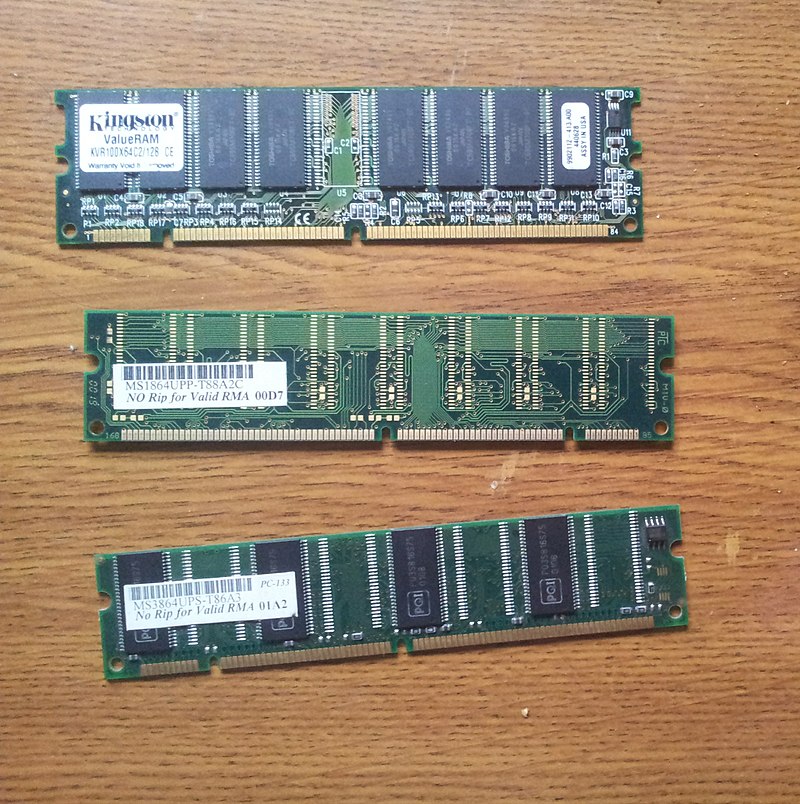

6. Synchronous Dynamic Random Access Memory

The difference between SDRAM and DRAM is right there in the name. SDRAMs always stay synchronized with the computer’s system clock, whereas regular DRAMs work asynchronously with respect to that clock. As a result, read-write operations using SDRAM are much faster and more efficient than those using DRAM. To increase performance even further, the memory in an SDRAM is divided into several banks that permit concurrent accesses.

Samsung was also the first to produce an SDRAM chip, the 16 Mbit KM48SL2000, tiny by today’s standards. Samsung started testing DDR, or double data rate, technology in June 1998. Three years later DDR2 was developed; it performs four accesses every clock cycle, doubling the speed of the first-generation DDR. At that point, however, most significant RAM producers, including Sony and Toshiba, were concentrating on embedded DRAM, also known as eDRAM, rather than jumping on the DDR hype train.

In the end, Samsung developed DDR3 in 2003. Once more the read-write rate doubled, to 8 accesses per cycle. Although speeds have increased, CAS latency continues to hold back progress significantly. Latency describes how quickly RAM responds to a request for data, so lower is better. Unfortunately, when the data rate doubles, the CAS latency counted in clock cycles rises as well, so the “actual” latency in nanoseconds barely improves, and a slower SDRAM can even end up with slightly lower actual latency.
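The gap between rated CAS latency and "actual" latency is easy to check with a little arithmetic. CAS latency is quoted in clock cycles, and because DDR memory transfers data twice per clock, the clock frequency in MHz is half the data rate in MT/s. The Python sketch below converts the two figures into nanoseconds. The function name `cas_latency_ns` and the sample speed grades are illustrative values chosen for this example, not figures from the article.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """Convert a CAS latency in clock cycles to nanoseconds.

    data_rate_mts: data rate in megatransfers per second (e.g. 1600 for DDR3-1600).
    DDR transfers twice per clock, so clock_mhz = data_rate_mts / 2 and one
    cycle lasts 1000 / clock_mhz nanoseconds.
    """
    clock_mhz = data_rate_mts / 2
    return cas_cycles * 1000 / clock_mhz


# Illustrative speed grades (assumed values): (name, data rate in MT/s, CAS cycles)
modules = [
    ("DDR-400   CL3", 400, 3),
    ("DDR2-800  CL6", 800, 6),
    ("DDR3-1600 CL11", 1600, 11),
    ("DDR4-3200 CL22", 3200, 22),
]

for name, rate, cl in modules:
    print(f"{name}: {cas_latency_ns(rate, cl):.2f} ns")
```

With these sample values the data rate grows eightfold from the first row to the last, while the computed latency stays within a narrow band of about 14 to 15 ns. That is the effect the paragraph above describes: each generation moves more data per second, but the wait for the first piece of data hardly changes.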

Samsung started selling DDR3 chips with an 8192 Mbit capacity in the middle of 2008. The chips were a huge success, and many computers then started to use DDR3 technology. Samsung continued to improve DDR3’s capacity and clock speeds. SK Hynix, another relatively new participant in the RAM manufacturing industry, also entered the DDR3 race. Together, the two Korean technology firms fostered the market competition needed to keep advancing DDR3 technology.

Hynix first made a 2048 Mbit DDR4 prototype public in early 2011. The new double data rate technology made a lot of promises, including reduced power consumption and quicker data transfers. Samsung’s DDR4 chip eventually became available in 2013, although most consumers were still using DDR3, its predecessor. As a result, significant adoption of DDR4 did not occur until 2015. Many manufacturers have since outlined their plans for DDR5 chips, and some have already made them available for purchase.

Conclusion

Random-access memory, one of the most sought-after hardware components when it comes to computing performance, may be nearing the end of its current line of development with DDR5. Looking back at the development of RAM, we can see how massive magnetic core structures that once filled a whole room were transformed into tiny, portable technology, while storage capacity and access efficiency grew exponentially. That serves to highlight yet another example of human ingenuity and the tremendous power it possesses.

The transistor-capacitor memory architecture cannot be developed indefinitely, since capacitors can only shrink so far as feature sizes get smaller and smaller. The transition to nanotechnology and work at the molecular level is the obvious next step in the evolution of computing memory. Of course, don’t expect it to happen within the next five years, as we still have more memory than we truly require.